Artificial Intelligence is everywhere: drafting emails, approving loans, screening résumés, even recommending who we should date. The global AI market is expected to reach $1.3 trillion by 2032 (Precedence Research), making it one of the fastest-growing sectors in history.

But with this explosive adoption comes an uncomfortable question: what happens when we rely on AI too much? Just as smartphones reshaped human behavior in ways we never fully predicted, excessive AI use is revealing troubling unintended consequences. From ethical blind spots to workforce dependency, the story of AI isn’t just about progress; it’s also about caution.

Algorithmic Bias: The Unseen Danger

AI systems are only as fair as the data they are trained on. A widely cited MIT study found that some commercial facial analysis systems misclassified darker-skinned women at error rates of up to 34.7%, compared with less than 1% for lighter-skinned men.

For businesses, this bias isn’t theoretical. Hiring platforms have rejected qualified candidates because of skewed training data. Banks have issued lower credit limits to women compared to men with identical financial profiles. In healthcare, biased algorithms have under-prioritized care for Black patients.

Unchecked, these invisible biases can reinforce existing inequalities on a global scale.

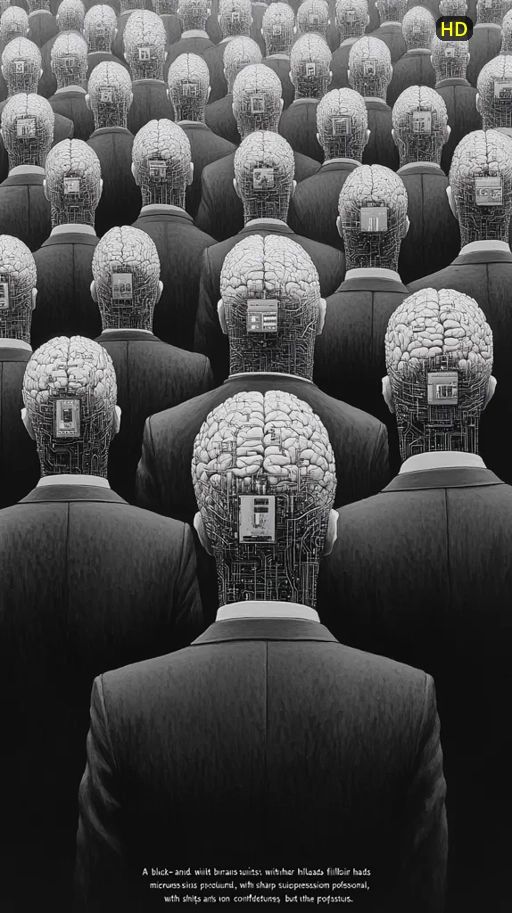

The Automation Addiction

AI promises efficiency, but efficiency comes at a cost. A recent PwC survey revealed that 37% of employees now use AI tools daily, often without oversight. While this boosts productivity, it also fosters dependency.

Consider content creation. Generative AI can churn out articles, reports, and marketing copy in seconds. But if businesses over-rely on these tools, the risk is homogenization: a flood of bland, formulaic content that drowns out human creativity. Worse still, employees may lose critical thinking skills when they defer too much to the algorithm.

It’s the same danger as overusing GPS: we forget how to navigate on our own.

Privacy and Surveillance Risks

AI’s appetite for data is insatiable. Every interaction (clicks, purchases, even voice recordings) feeds machine learning systems. But who owns this data, and how is it being used?

In 2023, Italy temporarily banned ChatGPT over privacy concerns. Regulators in the EU and U.S. are increasingly scrutinizing how AI companies handle sensitive information. For consumers, constant surveillance creates what experts call a “chilling effect,” where people self-censor behavior because they feel watched.

The dark side of AI here is subtle but profound: a slow erosion of personal freedom in exchange for convenience.

Job Displacement and the Human Cost

The World Economic Forum estimates that AI, automation, and related economic shifts could eliminate 83 million jobs globally by 2027, even as they create 69 million new ones. The problem isn’t just job loss; it’s the speed of disruption.

Truck drivers, paralegals, translators, and even junior software engineers are seeing tasks increasingly automated. Without adequate reskilling programs, millions risk being left behind in the AI revolution.

History shows us that technological shifts always create winners and losers. The danger with AI is the acceleration: workers may not have time to adapt.

Mental Health and Digital Burnout

AI is designed to optimize, but humans aren’t machines. Constant exposure to algorithm-driven apps, from TikTok feeds to workplace dashboards, can lead to information overload and decision fatigue.

Psychologists warn of a “dopamine trap,” where recommendation systems hijack attention, making users more distracted and less present in real life. For employees, the pressure to keep up with AI-enhanced productivity can cause anxiety, imposter syndrome, and burnout.

The irony? AI tools meant to make us more efficient may actually be making us less well.

Navigating AI Responsibly

The solution isn’t to reject AI, but to use it mindfully. Responsible adoption requires:

- Human oversight: AI should assist decisions, not replace them entirely.

- Bias audits: Companies must test and correct for discriminatory outcomes.

- Digital hygiene: Limiting screen time and creating “AI-free” zones can protect mental health.

- Reskilling initiatives: Governments and businesses need to invest in preparing workers for new roles.
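
To make the bias audit item concrete, here is a minimal Python sketch of one common check: comparing how often each group receives a positive outcome. The group labels and decisions are made-up illustration data, and the function names are hypothetical. The 0.8 cutoff follows the "four-fifths" rule of thumb from US employment guidelines; it is an assumption here, not a universal legal standard, and a real audit would look at far more than one ratio.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest group selection rate by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so no gap to measure
    return min(rates.values()) / highest


# Hypothetical decisions from an automated screening tool (illustration only).
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: ratios below 0.8 are flagged for human review.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A flagged result does not prove discrimination on its own. It is a signal that the system's outcomes deserve a closer look by a human reviewer.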

As Dr. Timnit Gebru, a leading AI ethicist, notes: “AI doesn’t have to be oppressive. But if left unchecked, it will replicate the power structures that already exist.”

Conclusion: A Call for Balance

AI is not inherently dangerous, but our overreliance on it could be. Like any powerful technology, the key lies in balance. Too much AI risks making societies more unequal, workers less skilled, and humans less free.

The dark side of AI isn’t about the machines themselves. It’s about us and the choices we make in shaping how they’re used.